- Part one looked at user profiles. Generally, the more completely you fill out your profile (description, avatar, background image etc), the more followers you seem to have; and high-status description terms (‘entrepreneur’, ‘author’, ‘speaker’ etc) perform better than, er, low-status ones (‘student’, ‘nerd’ etc).

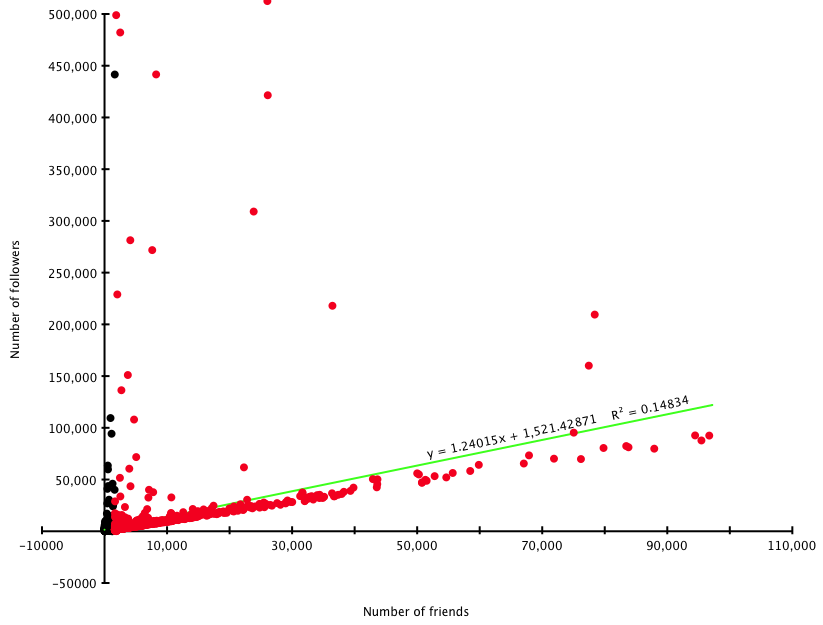

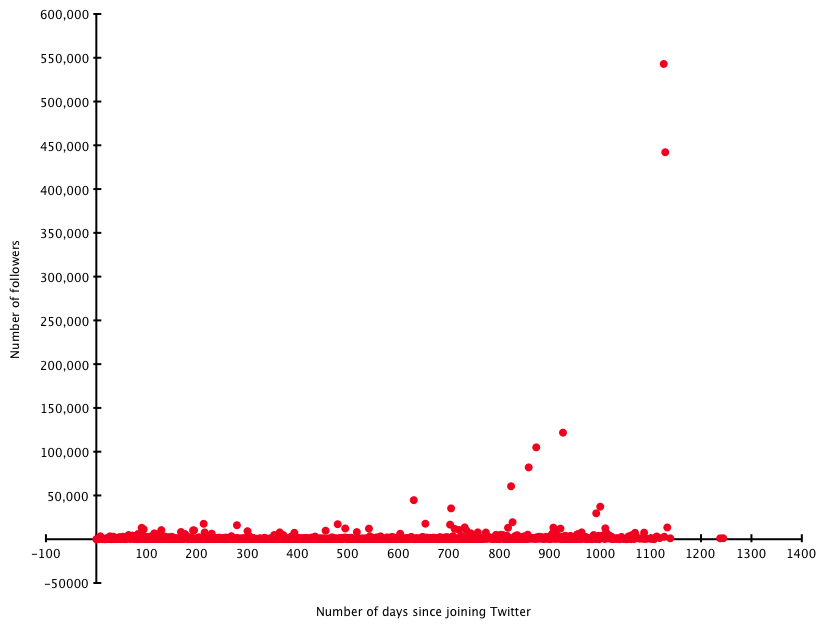

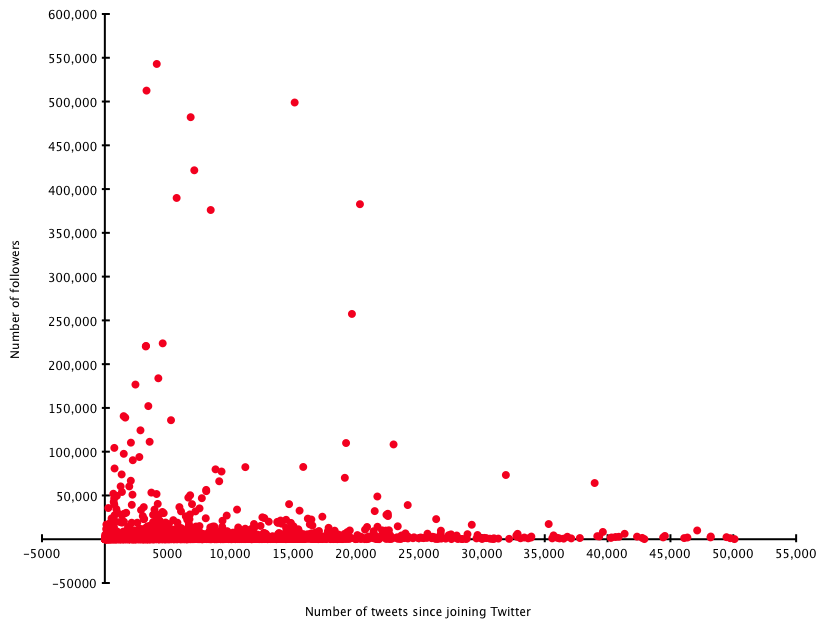

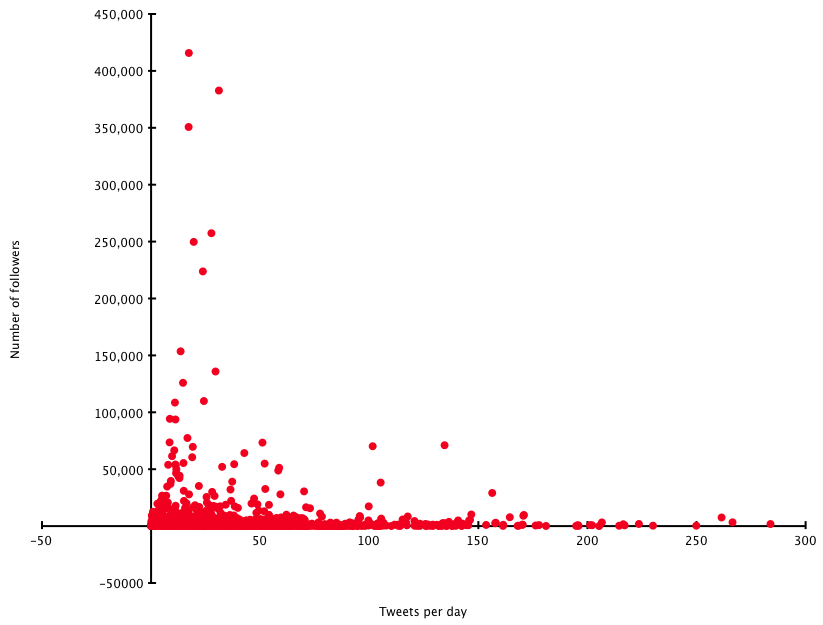

- Part two discussed friend counts and frequency of tweeting. There is an unsurprisingly close correlation between the number of friends you have and the number of followers, and you’re better off tweeting fewer than 30 times a day to avoid putting off followers. (Remembering always that correlation doesn’t mean causation, fact fans!)

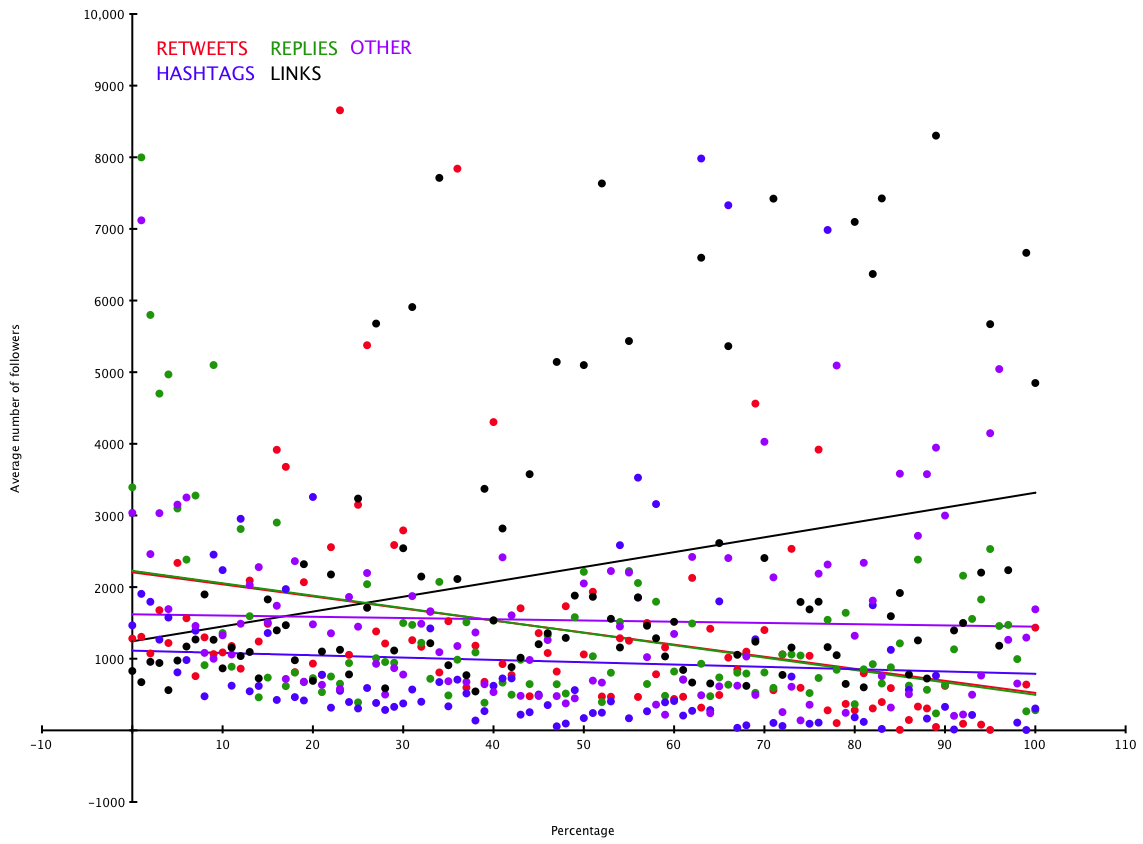

Twanalyst also records data on the ‘type’ of tweets people write. It divides them into five categories:

- Replies/mentions – anything beginning with an @ goes into this pot (mean 35%, median 34%)

- Retweets – ie simply retweeting others’ content (with RT as the flag) (mean 5%, median 1%)

- Links – tweets that contain web links pointing elsewhere (mean 16%, median 9%)

- Hashtags – tweets that use a hashtag to participate in some group activity (mean 3%, median 0%)

- Everything else – ie just normal tweets that aren’t any of the above (what people had for lunch, random witticisms, or whatever) (mean 41%, median 37%)

Obviously, in reality these categories aren’t so discrete, but let’s live with that and assume every tweet falls into one category or another. Twanalyst records each as a percentage of total tweeting output (it analyses the most recent 200 tweets).
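To make the categorisation concrete, here’s a rough Python sketch of how tweets might be sorted into these five pots and turned into percentages. It is an assumption, not Twanalyst’s actual code: the regexes, the hypothetical helper names (`classify`, `category_percentages`) and the precedence order (reply, then retweet, then link, then hashtag) are mine, chosen so that every tweet lands in exactly one category.

```python
import re
from collections import Counter

# Hypothetical rules approximating the five categories described above.
# Precedence (reply > retweet > link > hashtag > other) is an assumption
# that forces every tweet into exactly one category.
CATEGORIES = ("reply", "retweet", "link", "hashtag", "other")
RETWEET_RE = re.compile(r"\bRT\b")        # "RT" as a standalone word
LINK_RE = re.compile(r"https?://\S+")     # any http(s) URL
HASHTAG_RE = re.compile(r"(?<!\w)#\w+")   # "#tag" not glued to a preceding word


def classify(tweet: str) -> str:
    """Assign a single tweet to exactly one category."""
    text = tweet.strip()
    if text.startswith("@"):
        return "reply"
    if RETWEET_RE.search(text):
        return "retweet"
    if LINK_RE.search(text):
        return "link"
    if HASHTAG_RE.search(text):
        return "hashtag"
    return "other"


def category_percentages(tweets: list[str], window: int = 200) -> dict[str, float]:
    """Percentage of the most recent `window` tweets in each category.

    Assumes `tweets` is ordered newest first.
    """
    recent = tweets[:window]
    if not recent:
        return {c: 0.0 for c in CATEGORIES}
    counts = Counter(classify(t) for t in recent)
    return {c: 100.0 * counts[c] / len(recent) for c in CATEGORIES}


if __name__ == "__main__":
    sample = [
        "@alice thanks!",
        "RT @bob: great post",
        "Reading this http://example.com",
        "Off to #barcamp",
        "Had soup for lunch",
    ]
    print(category_percentages(sample))
    # {'reply': 20.0, 'retweet': 20.0, 'link': 20.0, 'hashtag': 20.0, 'other': 20.0}
```

The strict precedence is what makes the five percentages add up to 100, mirroring the “everything falls into one or another” simplification; a tweet that starts with @ and also contains a link counts only as a reply.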

Here are these percentages plotted against the average follower count for each percentage point (I’ve chopped off a few extreme values caused by accounts with hundreds of thousands of followers):

The ‘lines of best fit’ are not hugely precise, but broadly speaking there seems to be a slight correlation between tweeting links and higher follower counts; perhaps people are interested in accounts that gather interesting stuff from elsewhere and tweet about it. The other categories don’t show any strong correlations.
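For the curious, here’s a minimal sketch of the kind of analysis behind that graph: bucket accounts by whole percentage point, average the follower counts in each bucket, then fit an ordinary least-squares line, with Pearson’s r as a rough gauge of how strong the correlation is. The function names and the 100,000-follower cut-off are hypothetical; the post doesn’t say exactly how the outliers were trimmed or how the lines were fitted, and the sample data is made up.

```python
import math
from collections import defaultdict


def mean_followers_by_percent(rows, max_followers=100_000):
    """Average follower count per whole percentage point.

    `rows` is an iterable of (percentage, follower_count) pairs, one per account.
    Accounts above `max_followers` are dropped as outliers (the cut-off is an assumption).
    """
    buckets = defaultdict(list)
    for pct, followers in rows:
        if followers > max_followers:
            continue
        buckets[round(pct)].append(followers)  # nearest whole percentage point
    return {p: sum(v) / len(v) for p, v in sorted(buckets.items())}


def least_squares(xs, ys):
    """Ordinary least-squares line y = slope * x + intercept.

    Needs at least two distinct x values.
    """
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, my - slope * mx


def pearson_r(xs, ys):
    """Pearson correlation coefficient: -1 (inverse) to +1 (perfectly linear)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    return sxy / math.sqrt(sxx * syy)


if __name__ == "__main__":
    # Made-up (link percentage, followers) pairs, purely for illustration.
    rows = [(10, 200), (10, 400), (20, 500), (30, 700), (40, 900), (50, 250_000)]
    avg = mean_followers_by_percent(rows)
    print(avg)  # {10: 300.0, 20: 500.0, 30: 700.0, 40: 900.0}
    xs, ys = list(avg), list(avg.values())
    print(least_squares(xs, ys))  # (20.0, 100.0)
    print(pearson_r(xs, ys))  # 1.0
```

Note that averaging within buckets before fitting smooths out a lot of per-account noise, so an r computed on the averages will usually look stronger than one computed on the raw accounts.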

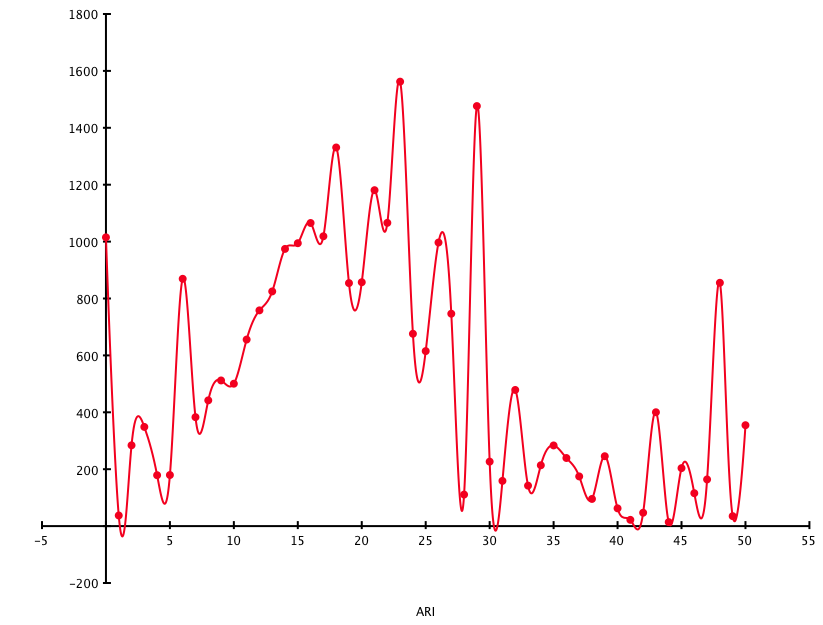

One final analysis. Twanalyst also calculates each user’s Automated Readability Index (ARI), a rough measure of the simplicity or complexity of the language they use. A figure between 6 and 12 represents ‘normal’ prose: anything below that is simplistic, and much above it enters the realm of obscurantism. (Note, though, that because tweets often contain links, odd hashtags and so on, the ARI figure is necessarily a bit vague.) Here’s ARI (chopped off at 50, and ignoring Twitter accounts with more than 100,000 followers) measured against average follower counts for each data point:

Not much to add here, except the obvious: very simple and very complex writing styles seem to put people off (apart from an odd blip at ARI=48), but a reasonable level of complexity may actually be popular. Or it may all be coincidence. Over and out!
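For anyone who wants to try this on their own tweets, the ARI mentioned above is conventionally calculated as 4.71 × (characters ÷ words) + 0.5 × (words ÷ sentences) − 21.43. The sketch below applies that formula; the naive tokenisation and the optional URL stripping are my own assumptions, not Twanalyst’s method, and the example shows why links make the figure vague.

```python
import re

URL_RE = re.compile(r"https?://\S+")


def automated_readability_index(text, strip_urls=True):
    """ARI = 4.71 * (characters / words) + 0.5 * (words / sentences) - 21.43.

    Tokenisation here is deliberately naive and is an assumption:
    - words: whitespace-separated tokens containing at least one letter or digit
    - characters: letters and digits in those words
    - sentences: runs of '.', '!' or '?', with a minimum of one
    Stripping URLs is an optional tweak, not part of the standard formula.
    """
    if strip_urls:
        text = URL_RE.sub(" ", text)
    words = [w for w in text.split() if any(ch.isalnum() for ch in w)]
    if not words:
        raise ValueError("text contains no words")
    characters = sum(ch.isalnum() for w in words for ch in w)
    sentences = max(1, len(re.findall(r"[.!?]+", text)))
    return 4.71 * characters / len(words) + 0.5 * len(words) / sentences - 21.43


if __name__ == "__main__":
    print(round(automated_readability_index("The cat sat on the mat. It was happy."), 2))  # -5.05
    tweet = "Read this http://example.com/a.b now."
    print(round(automated_readability_index(tweet), 2))                    # -2.66
    print(round(automated_readability_index(tweet, strip_urls=False), 2))  # 11.03
```

Left in, that single URL counts as one very long ‘word’ and its dots count as extra sentence breaks, which lifts a simplistic tweet into ‘normal prose’ territory.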